Reflection AI has shaken up the AI world with two powerful new language models. The Reflection 70B is now the top open-source model out there, and the upcoming Reflection 405B is expected to beat every other model, open or closed-source. With their new Reflection-Tuning technology, these models are set to change how we use and think about AI.

Reflection 70B: The World’s Leading Open-Source LLM

Reflection 70B has quickly made headlines as the most powerful open-source LLM available today. The model was trained using Reflection-Tuning technology, a cutting-edge technique that allows it to detect errors in its reasoning processes and correct them in real-time. This unique ability greatly improves the model’s performance, accuracy, and reliability across a wide range of tasks.

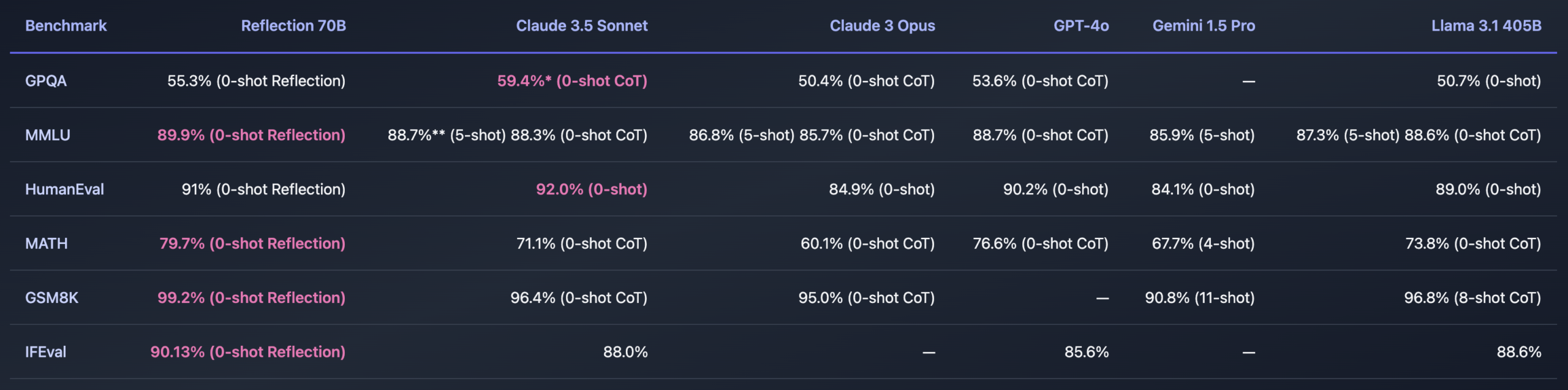

Benchmark Performance

Reflection 70B has shown exceptional performance in several well-known benchmarks that test reasoning, problem-solving, and comprehension capabilities. For instance, the model achieved the following results in recent tests:

- GPQA (General Purpose Question Answering): 55.3% (0-shot Reflection)

- MMLU (Massive Multitask Language Understanding): 89.9% (0-shot Reflection)

- HumanEval (Python Code Generation): 91% (0-shot Reflection)

- MATH (Mathematical Problem Solving): 79.7% (0-shot Reflection)

- GSM8K (Grade School Math Problems): 99.2% (0-shot Reflection)

These scores demonstrate how Reflection 70B outperforms other leading open-source models and even rivals several top closed-source models like Claude 3.5 Sonnet and GPT-4o. This is a remarkable achievement for an open-source LLM, which has been traditionally viewed as less competitive compared to proprietary models.

Innovative Reflection-Tuning Technology

At the core of Reflection 70B’s success is the Reflection-Tuning technology. This advanced mechanism enables the model to correct its own reasoning mid-task. By identifying mistakes and course-correcting in real-time, Reflection-Tuning significantly reduces common issues like AI hallucinations and incorrect conclusions.

Reflection-Tuning also allows the model to “reflect” on its reasoning process, ensuring that its responses are well thought out before delivering final answers. This is particularly beneficial in complex tasks such as programming, mathematics, and logical problem-solving.

The Reflection-Tuning process works within specially designed tokens embedded in the model. For example, the model outputs its internal reasoning process within <thinking> tags and makes corrections inside <reflection> tags before delivering the final answer between <output> tags. This innovative design ensures that the model separates its thought process from its output, giving users a transparent view of how it arrives at conclusions.

Wide Applications and User Benefits

Reflection 70B is well-suited for a variety of applications across industries. Its ability to self-correct makes it a valuable tool for fields such as:

- Data Science: Helping data scientists interpret complex data sets, automate tasks, and reduce the risk of misinterpretation.

- AI Research and Development: Offering a powerful platform for developing next-gen AI systems with enhanced problem-solving capabilities.

- Business Analytics: Providing detailed analysis and reasoning that can support decision-making in business environments.

- Education: Enabling educators and students to engage with advanced AI models for learning and problem-solving tasks.

Its robust self-correcting abilities make Reflection 70B an ideal choice for researchers, developers, and educators looking to explore and leverage AI technology.

Coming Soon: Reflection 405B

While Reflection 70B is already leading the way, the upcoming Reflection 405B model is expected to revolutionize the AI space even further. Reflection 405B is slated to be the world’s most powerful LLM, surpassing not only other open-source models but also top closed-source models such as GPT-4o and Claude 3.5 Sonnet.

Reflection AI’s team has hinted that Reflection 405B will feature the same self-correcting technology, but with a vastly larger parameter size—405 billion parameters compared to 70 billion. This increase in model size is expected to significantly boost its performance, making it more adept at handling even the most complex tasks and queries.

Although the specific benchmarks for Reflection 405B have not yet been released, expectations are high. AI enthusiasts, researchers, and businesses are eagerly awaiting its release, anticipating that it will set new performance records and open new possibilities in AI-driven applications.

Why Reflection AI Is Leading the Way

Reflection AI’s rapid rise in the LLM space is largely attributed to its innovative approach and commitment to open-source technology. Unlike many of its competitors, which lock their best models behind closed-source barriers, Reflection AI is making cutting-edge AI tools accessible to a wide audience.

Here’s why Reflection AI stands out:

- Advanced Reflection-Tuning Technology: By allowing the model to detect and correct its own reasoning mistakes, Reflection-Tuning drastically improves performance, particularly in tasks requiring logical reasoning, mathematical accuracy, and code generation.

- Top-Tier Open-Source Performance: Reflection 70B is the highest-performing open-source LLM on the market, rivaling even the best closed-source models.

- Wide Application Potential: Reflection AI models are versatile and can be applied in various fields, from academic research to business operations.

- Accessible to Everyone: As an open-source model, Reflection 70B is available for download and use by anyone, whether they are developers, researchers, or hobbyists.

Getting the Best Results with Reflection 70B

To optimize the performance of Reflection 70B, the developers recommend specific settings during use. A temperature setting of 0.7 and a top_p setting of 0.95 provide the best balance of accuracy and diversity in responses. Additionally, users can prompt the model with “Think carefully.” to further improve the accuracy of its answers.

The model is available for use on Hugging Face and other AI platforms, although high demand has occasionally caused server issues. Reflection AI is working to address these issues to ensure that the model remains accessible to all users.

Looking Ahead: The Future of AI with Reflection AI

Reflection AI’s release of the 70B model is just the beginning. The upcoming Reflection 405B is poised to redefine the limits of AI technology. With its larger parameter size and improved self-correction capabilities, Reflection 405B is expected to be the best-performing LLM in the world, including closed-source models.

This rapid pace of development highlights the growing importance of AI technology in today’s world. As AI models like Reflection 70B and Reflection 405B continue to evolve, they will play an increasingly critical role in research, education, business, and more.

Final Thoughts

Reflection AI is pushing the boundaries of what’s possible with LLM technology. The Reflection 70B model has already set a new standard in open-source AI, and the upcoming Reflection 405B is expected to elevate AI capabilities to unprecedented levels.

With its self-correcting abilities, these models are not only powerful tools for AI developers and researchers but also accessible resources for anyone interested in exploring the future of artificial intelligence.

Whether you are an AI enthusiast, data scientist, or business leader, Reflection AI offers cutting-edge tools to help you stay ahead in an increasingly AI-driven world. Keep an eye on the release of Reflection 405B, as it promises to be a game-changer in the AI landscape.

[…] data in a vector database. This allows data teams to focus on providing relevant information for AI models without the need for a complex tool […]

[…] like analyzing data, proposing new models, and improving existing ones. This makes it easier for AI models to learn and get better over time. It can automatically read research papers and financial reports, […]