OpenAI has recently launched four new APIs, each designed to address different needs and use cases in the world of artificial intelligence. These APIs offer various features that can enhance applications, streamline workflows, and improve user interactions.

In this article, we will look at each announcement in detail to help you determine which API is the right fit for your projects.

APIs Overview

Here’s a comparison table for the four APIs based on their key features, capabilities, and unique offerings:

| API | Key Features | Unique Capabilities | Best Use Cases | Pricing |

|---|---|---|---|---|

| ChatGPT API | – Text generation – Conversational capabilities – Contextual understanding |

– Personalized interactions – Multi-turn conversations |

– Customer support – Virtual assistants – Content creation |

Tiered pricing based on usage |

| DALL·E API | – Image generation from text prompts – Style transfer and variations – Image editing capabilities |

– High-quality image synthesis – Supports various artistic styles |

– Graphic design – Art generation – Marketing materials |

Pay-per-use model |

| Whisper API | – Speech-to-text transcription – Multilingual support – Noise robustness |

– Real-time audio processing – Custom vocabulary support |

– Transcription services – Voice assistants – Accessibility |

Tiered pricing based on usage |

| Multi-modal API | – Combined text and image input handling – Enhanced contextual understanding |

– Unified responses to both text and image inputs – Supports interactive workflows |

– Educational tools – Visual content generation – Enhanced customer service |

Tiered pricing based on usage |

Pricing: Pricing models may vary based on the amount of usage, number of tokens, or other metrics. Developers are encouraged to refer to the specific API documentation for detailed pricing structures.

Use Cases: Each API can be used across various industries, but the mentioned use cases highlight their most effective applications.

Announcement 1: Model Distillation in the API

What is Model Distillation?

Model distillation is a process that helps developers create smaller, more cost-effective AI models that perform as well as larger, more powerful models. This is done by using the outputs of these larger models to train the smaller ones.

What’s New?

OpenAI has introduced a new tool called Model Distillation. This allows developers to easily manage the entire process of training smaller models directly on the OpenAI platform, making it easier and less error-prone than before.

Key Features:

- Stored Completions: This feature allows developers to automatically save the input-output examples generated by powerful models like GPT-4o. These examples can then be used to train the smaller models. It makes building datasets much easier.

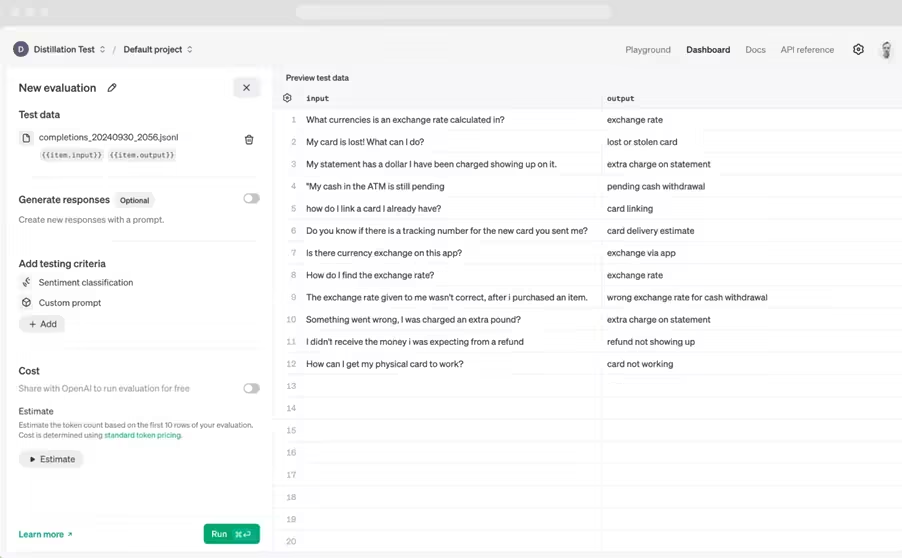

- Evals: This is a new tool for developers to measure how well their models are performing. Instead of creating separate evaluation scripts, developers can use Evals to run tests directly on the OpenAI platform. It can use data from Stored Completions or any other datasets developers provide.

- Fine-tuning: The tools for Stored Completions and Evals work together with existing fine-tuning features. This means developers can use saved examples to improve their smaller models and test their performance all in one place.

How to Use It:

- Start by creating an evaluation to check how well the smaller model (like GPT-4o mini) is performing.

- Use the Stored Completions feature to create a dataset with real-world examples generated by a larger model (like GPT-4o).

- Finally, use this dataset to train the smaller model. After fine-tuning, run evaluations again to see if the model meets performance standards.

Availability and Costs:

- The Model Distillation tools are available to all developers.

- OpenAI is currently offering 2 million free training tokens daily for the smaller models until October 31, 2024. After this, regular prices will apply.

- Stored Completions are free to use, but Evals are in beta and will cost based on usage, although there are free evaluation options available until the end of the year for developers who share their evaluations with OpenAI.

For more details, check out their [Model Distillation documentation].

Announcement 2: Introducing the Realtime API

What’s New?

Realtime API is a tool for developers that allows them to build applications where people can have real-time, natural conversations using speech. This tool is currently in public beta for all paid developers.

Key Features:

- Speech-to-Speech Conversations: The API allows users to speak and have the application respond back in speech, just like having a conversation.

- Easy Integration: Developers no longer need to use multiple different tools to handle voice interactions. They can do everything with a single API call, making it simpler to create engaging voice experiences.

- Audio Capabilities: Along with the Realtime API, developers can also use audio features in the Chat Completions API, which will be available soon.

How It Works:

- In the past, creating a voice assistant required several steps: converting speech to text, processing that text, and then converting the response back to speech. The new API streamlines this process, allowing for smoother conversations with less delay.

- It can also handle interruptions in conversations, making interactions feel more natural.

Availability and Pricing:

- The Realtime API is available for all paid developers to start using right away.

- The pricing structure for using the API is based on the amount of text and audio processed. For Example, audio input costs about $0.06 per minute.

Getting Started:

Developers can start using the Realtime API soon through the OpenAI platform and get support from documentation and libraries designed for this API.

The team plans to add more features, like support for other types of media (like video), allow more simultaneous users, and integrate better with existing development tools.

Announcement 3: Introducing vision to the fine-tuning API

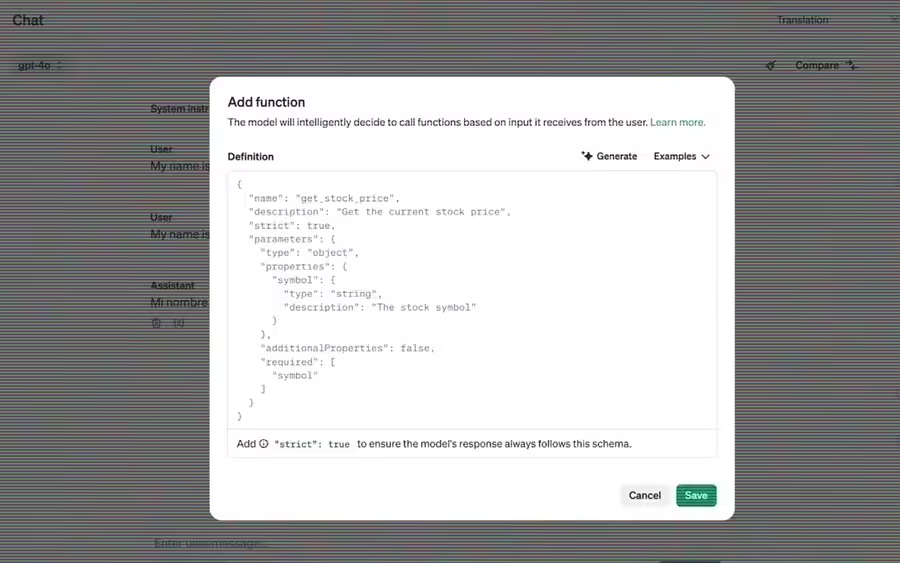

The announcement introduces new features for developers working with the GPT-4o model, allowing them to improve the model’s ability to understand and work with images, not just text.

What’s New:

With the help of Vision Fine-Tuning developers can now fine-tune the GPT-4o model using both images and text. This means they can make the model better at recognizing and analyzing images, which is useful for various applications.

Why It Matters:

- Improved Applications: This capability can enhance tasks like:

- Visual Search: Finding images or products more effectively.

- Object Detection: Helping self-driving cars or smart city systems identify objects accurately.

- Medical Analysis: Analyzing medical images more reliably.

How It Works:

Developers prepare image datasets and can improve the model’s performance with as few as 100 images. The process is similar to how they’ve been fine-tuning the model using text.

Examples of Use:

- Grab: A delivery and rideshare company that improved its mapping data using images collected from drivers. They achieved better accuracy in recognizing traffic signs and counting lane dividers.

- Automat: A company that automates business processes and used fine-tuning to significantly increase the success rate of its automated systems.

- Coframe: An AI tool that helps businesses create websites more effectively by improving its ability to generate consistent visual styles and layouts.

Availability and Pricing:

Vision fine-tuning is available to all developers on paid plans, with some free training tokens offered until the end of October 2024. After that, there will be costs associated with training and using the model.

How to Get Started?

To get started, visit the fine-tuning dashboard(opens in a new window), click ‘create’ and select gpt-4o-2024-08-06 from the base model drop-down. To learn how to fine-tune GPT-4o with images, visit our docs(opens in a new window).

Announcement 4: Prompt Caching in the API

The announcement is about Prompt Caching, a new feature for developers using the API that helps them save money and speed up their applications. Here’s a breakdown of what it means in simple terms:

What is Prompt Caching?

When developers often use the same text inputs (or prompts) in their API calls like when they’re editing code or chatting with a bot. This feature allows them to reuse those recent prompts.

Benefits:

- Cost Savings: Developers get a 50% discount on the cost of using input tokens (the parts of text the model processes) that have been cached. This means they can save money while still getting the same outputs.

- Faster Processing: Using cached prompts speeds up the response time from the model, making applications run more efficiently.

Pricing Overview:

Here’s how the pricing works for different models when using cached versus uncached tokens:

| Model | Uncached Input Tokens | Cached Input Tokens | Output Tokens |

|---|---|---|---|

| GPT-4o | $2.50 | $1.25 | $10.00 |

| GPT-4o Fine-Tuning | $3.75 | $1.875 | $15.00 |

| GPT-4o Mini | $0.15 | $0.075 | $0.60 |

| GPT-4o Mini Fine-Tuning | $0.30 | $0.15 | $1.20 |

| o1 / o1-preview | $15.00 | $7.50 | $60.00 |

| o1 Mini | $3.00 | $1.50 | $12.00 |

How It Works:

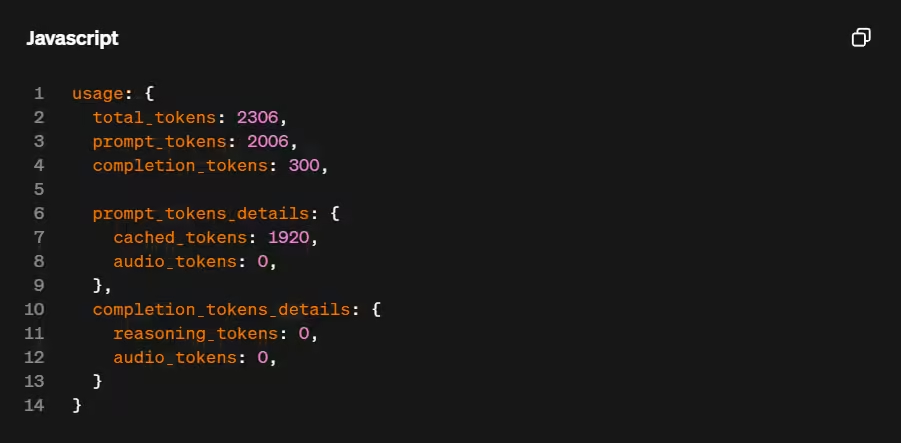

- Automatic Caching: Prompt Caching is automatically applied for supported models when the prompts are longer than 1,024 tokens. The API remembers and caches the longest part of the prompt that has been processed, allowing the reuse of common prefixes in subsequent requests.

- Cache Usage Monitoring: When using the API, the response will include a value that shows how many tokens were cached, helping developers track their savings.

Cache Clearing:

Cached data usually stays active for 5-10 minutes after the last use, but it will be cleared within one hour if not used. The caching is private and it’s not shared between different organizations, ensuring data security.

Prompt Caching is designed to help developers improve the performance and cost-effectiveness of their applications by leveraging previously processed prompts, making it easier to build scalable AI solutions. For more details, developers can check out the Prompt Caching docs.

Final Thoughts

OpenAI’s recent API launches bring exciting new features to help developers create better AI applications. The ChatGPT, DALL·E, Whisper, and Multi-modal APIs serve different needs, allowing for improved user experiences. With tools like Model Distillation and Vision Fine-Tuning, developers can make AI models work more efficiently and effectively.

The Realtime API enables natural conversations, making interactions smoother, while Prompt Caching helps save time and money by reusing common inputs. These tools allow businesses to engage users better and improve workflows.

By using these APIs, developers can explore new ideas, from interactive customer service to creative image and voice processing. OpenAI’s latest offerings provide the flexibility needed to enhance AI projects. Check out the documentation and start experimenting to see how these powerful tools can elevate your work!