OCR (Optical Character Recognition) technology has been a cornerstone in the evolution of data digitization, transforming text images into editable and searchable content. Historically, OCR systems have transitioned from rudimentary methods capable of recognizing basic text to advanced solutions designed to interpret diverse characters and formats.

However, the traditional OCR-1.0 systems, while foundational, often require multiple models to handle varied text recognition tasks, leading to increased complexity and maintenance challenges.

The Limitations of OCR-1.0 Systems

OCR-1.0 systems, characterized by their modular architectures, process images through distinct phases of detection, cropping, and recognition. Although these systems served specific needs, their application was often restricted to particular types of text and formats. This resulted in a fragmented approach where different OCR models were required for different tasks—such as recognizing handwritten text, mathematical equations, or musical notations—thereby complicating the user experience and increasing costs.

Moreover, these traditional systems were hindered by their inability to efficiently generalize across different text types and formats. The need for multiple, specialized models often led to inefficiencies and errors during integration, exacerbating maintenance issues and limiting their versatility.

Enter OCR-2.0: The General OCR Theory (GOT) Model

In response to the shortcomings of OCR-1.0, a groundbreaking advancement has emerged in the form of the General OCR Theory (GOT) model, introduced by researchers from StepFun, Megvii Technology, the University of Chinese Academy of Sciences, and Tsinghua University. As a pivotal component of OCR-2.0, GOT represents a significant leap forward in text recognition technology.

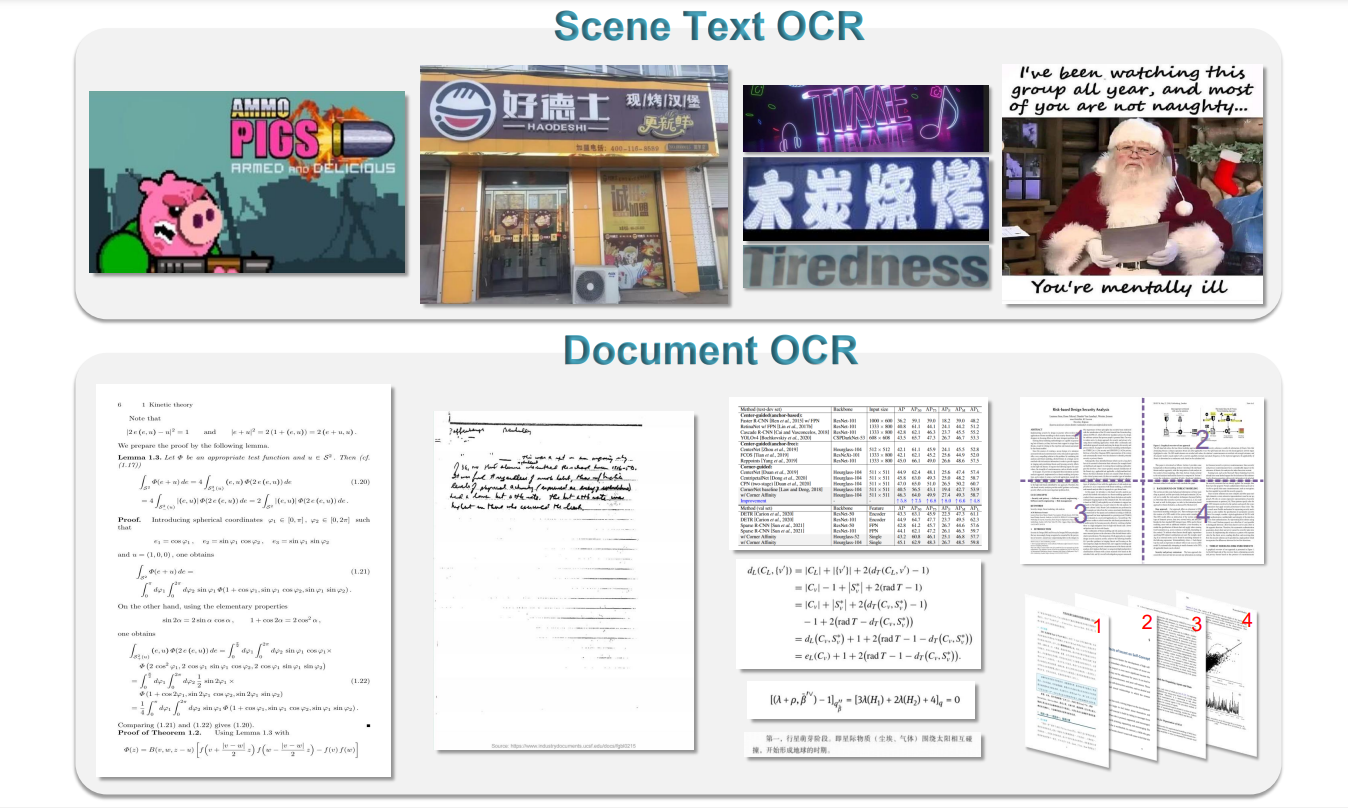

GOT is designed to unify and streamline OCR tasks into a single, end-to-end solution, eliminating the need for multiple, task-specific models. Its versatility allows it to handle a wide array of text formats, including plain text, complex formulas, charts, and geometric shapes, across both scene text and document-style images.

Architecture and Key Features

The GOT model boasts a sophisticated architecture comprising a high-compression encoder and a long-context decoder, totaling 580 million parameters. The encoder efficiently compresses input images—whether they are small slices or full-page scans—into 256 tokens of 1,024 dimensions each. The decoder, with a capacity for up to 8,000 maximum-length tokens, generates the OCR output. This architecture enables GOT to process large and intricate images with remarkable accuracy and efficiency.

One of the standout features of GOT is its support for generating formatted outputs in Markdown or LaTeX, making it exceptionally suited for scientific papers and mathematical content. Additionally, the model offers interactive OCR capabilities, allowing users to specify regions of interest through coordinates or colors, further enhancing its adaptability.

Performance and Advancements

In rigorous testing, GOT demonstrated superior performance compared to existing models such as UReader and LLaVA-NeXT. For English document-level OCR, GOT achieved an impressive F1-score of 0.952, outpacing models with billions of parameters while utilizing only 580 million parameters. The model excelled in Chinese document-level OCR as well, with an F1-score of 0.961. Its performance in scene-text OCR tasks was equally notable, with precision rates of 0.926 for English and 0.934 for Chinese.

GOT’s ability to handle complex characters, including those found in musical scores and geometric shapes, underscores its advanced capabilities. The model also performs exceptionally well in recognizing mathematical and molecular formulas, highlighting its broad applicability.

Dynamic Resolution and Multi-Page OCR

The GOT model incorporates dynamic resolution strategies and multi-page OCR technology, making it highly practical for real-world applications. It employs a sliding window approach to process extreme-resolution images, such as horizontally stitched two-page documents, without sacrificing accuracy.

The training process for GOT involved three stages: pre-training the vision encoder with 80 million parameters, joint training with the 580-million-parameter decoder, and post-training to enhance generalization. This comprehensive training regimen included a diverse set of datasets, featuring 5 million image-text pairs representing English and Chinese text, as well as synthetic data for mathematical formulas and tables.

Conclusion

GOT represents a transformative advancement in OCR technology, addressing the limitations of traditional OCR-1.0 models and overcoming the inefficiencies of current Large Vision-Language Models (LVLMs). By offering a unified, end-to-end solution capable of handling a diverse range of text formats with high precision and lower computational costs, GOT sets a new standard for OCR technology.

Its advanced features, including dynamic resolution and multi-page OCR capabilities, enhance its practicality and effectiveness in real-world applications, positioning it as a revolutionary tool in the field of text recognition. For more information about the GOT model and its capabilities, stay tuned to updates from the research teams and industry experts.

Awesome blog you have here but I was wanting to know if you knew of any discussion boards that cover the same topics discussed here? I’d really like to be a part of online community where I can get feed-back from other experienced people that share the same interest. If you have any recommendations, please let me know. Cheers!