Table of Contents

ToggleGoogle has launched significant enhancements to its Gemini models, launching the Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. This release comes with exciting features designed to improve performance and reduce costs for developers:

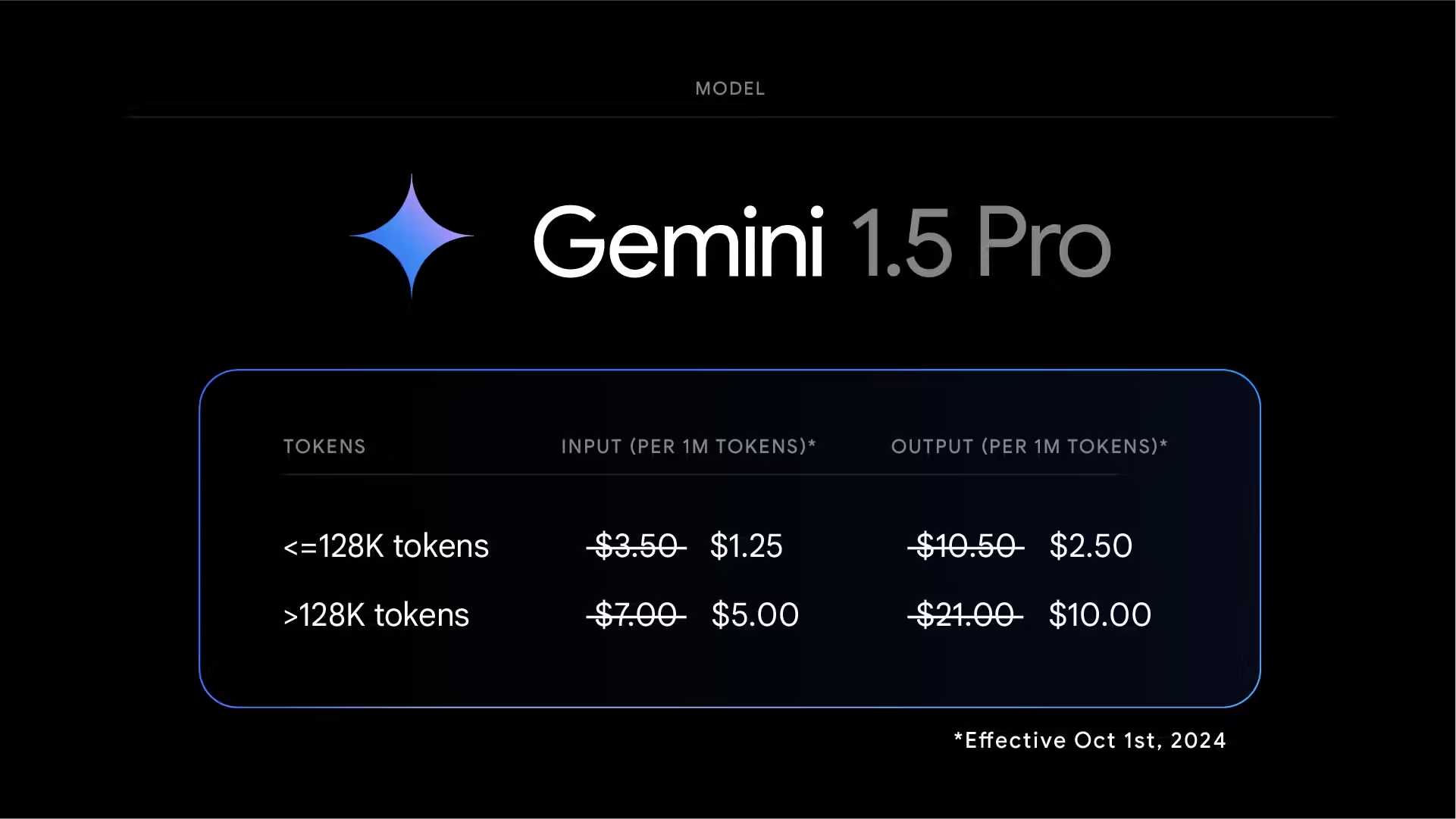

- Price Reductions: Input and output costs for the 1.5 Pro model are now reduced by 50% for prompts under 128K tokens.

- Increased Rate Limits: Developers will benefit from 2x higher rate limits for 1.5 Flash and nearly 3x higher for 1.5 Pro.

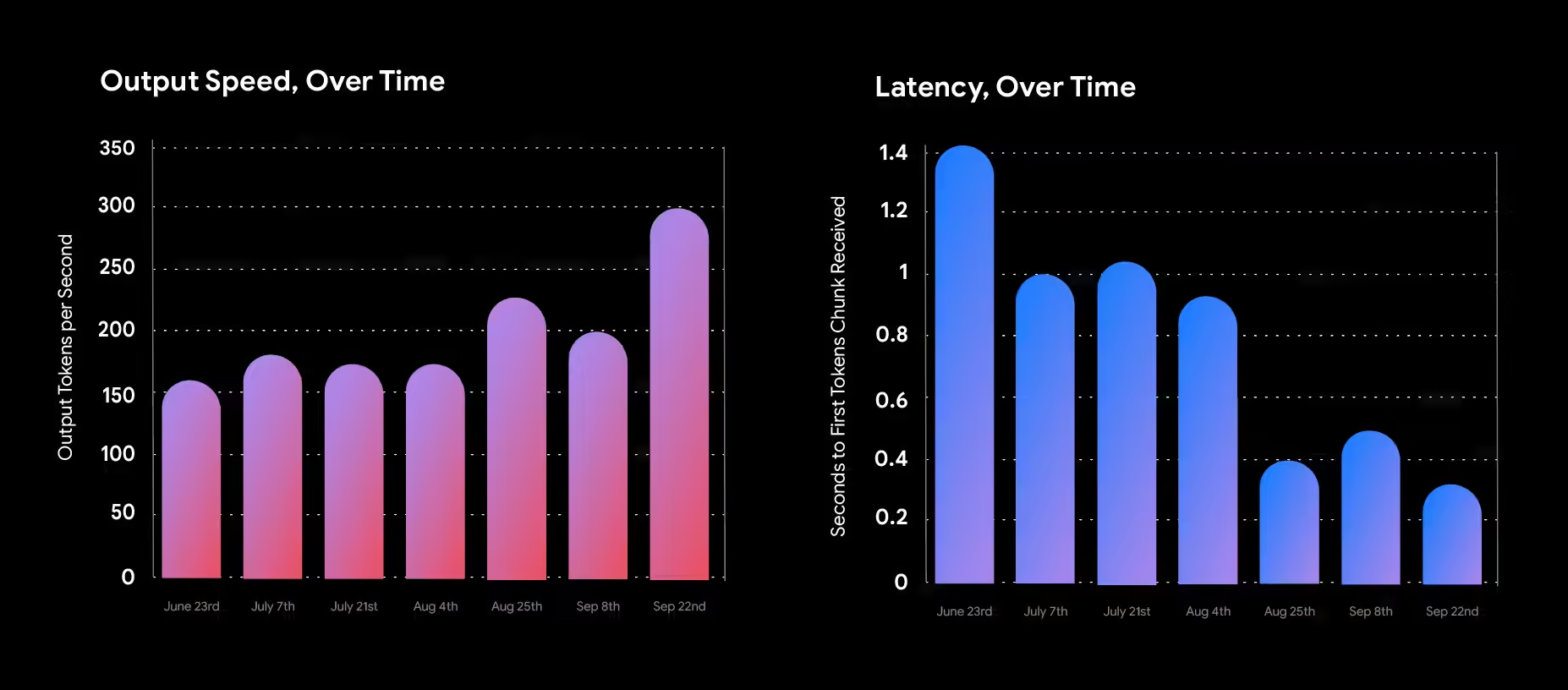

- Performance Boosts: The models now deliver outputs twice as fast with three times lower latency.

- Updated Default Filters: Enhanced filter settings aim to improve user experience and safety.

These new models build on the experimental releases shared at Google I/O in May, offering developers access to powerful capabilities via Google AI Studio and the Gemini API. Larger organizations can also access them through Vertex AI.

Improved Quality and Performance

The Gemini 1.5 series is designed to excel in a variety of tasks, including complex data synthesis and content generation from lengthy documents. Notable improvements have been observed in performance metrics:

- A 7% increase in MMLU-Pro scores.

- A substantial 20% improvement on math-focused benchmarks.

- Enhanced vision and code generation performance, with improvements ranging from 2-7%.

The updates also focus on providing more concise and helpful responses while maintaining strict content safety standards. This means fewer instances of response refusals and greater overall helpfulness.

Major Pricing Changes

Effective October 1, 2024, the Gemini 1.5 Pro model will see a 64% reduction in input tokens and 52% in output tokens. This pricing strategy aims to lower the costs associated with high-context applications, making it easier for developers to innovate.

| Model | Input Cost (per million tokens) | Output Cost (per million tokens) |

|---|---|---|

| Gemini 1.5 Pro | Reduced by 64% | Reduced by 52% |

| Gemini 1.5 Flash | Pricing adjustments forthcoming | TBD |

Enhanced Rate Limits and Latency

To facilitate more extensive usage, the rate limits have been increased significantly:

- 1.5 Flash: Up to 2,000 requests per minute (RPM).

- 1.5 Pro: Now at 1,000 RPM, up from the previous 360.

Additionally, the latency has been significantly reduced, with the latest models delivering outputs more swiftly than before, enabling developers to explore new use cases.

Focus on Safety and Reliability

In alignment with its commitment to safety, the updated models allow developers more control over filter settings, enhancing the balance between usability and safety. Default filters will not be applied automatically, allowing for customization based on specific project requirements.

Experimental Model Enhancements

Alongside these updates, Google is introducing the Gemini-1.5-Flash-8B-Exp-0924, an improved version of the previously announced model. This experimental release has shown substantial performance gains across text and multimodal tasks, reflecting the positive feedback from developers.

Looking Ahead

Google expresses enthusiasm for these updates and anticipates innovative applications from developers leveraging the new Gemini models. Upcoming features for Gemini Advanced users will include a chat-optimized version of Gemini 1.5 Pro-002.

For more information on migrating to the latest versions and utilizing these features, visit the Gemini API models page.

[…] the key points and cybersecurity threats from the HP Wolf Security Threat Insights Report – September 2024 for an easier […]

[…] about whether AWS was slow to introduce generative AI tools, leaving space for competitors like Google Cloud and Microsoft Azure. Garman countered this notion, explaining that AWS had already been offering […]