Generative AI, known for producing striking images on demand, is being used in a new way: to teach robots how to move and act. Researchers from Stephen James’s Robot Learning Lab in London have developed a system called Genima, which fine-tunes image-generating AI models such as Stable Diffusion to depict the movements a robot should make.

Genima, short for Generative Image as Action Models, is a behavior-cloning agent: it fine-tunes Stable Diffusion to draw “joint-actions” as visual targets on images of the scene. These targets are then used for visuomotor control, with a focus on robotic manipulation tasks.

The work, which will be presented at the Conference on Robot Learning (CoRL) next month, could change how robots, from mechanical arms to driverless cars, are trained to complete tasks.

How Genima Works

Traditionally, robots learn tasks by processing images of their environment and converting this visual data into actions. Genima takes a different approach: it uses images for both its input and its output, which simplifies the learning problem for machines.

The system overlays data from the robot’s sensors onto camera images of its environment, using colored spheres to mark the positions each of the robot’s joints needs to reach. For example, if a robot needs to pick up an object, the spheres show where its joints should move next.
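To make the idea of “drawing” actions concrete, here is a minimal sketch of how target joint positions could be turned into colored markers on a camera image. It assumes a simple pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), joint targets already expressed in the camera frame, and a made-up color per joint. The names `project`, `draw_targets`, and `JOINT_COLORS` are illustrative only; this is not Genima’s rendering code, and it draws flat discs rather than shaded spheres.

```python
# Hypothetical sketch: not Genima's actual rendering code.
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]
Point3 = Tuple[float, float, float]

# Hypothetical color per joint; the real palette is an assumption here.
JOINT_COLORS: Dict[str, RGB] = {
    "base": (255, 0, 0),
    "elbow": (0, 255, 0),
    "wrist": (0, 0, 255),
    "gripper": (255, 255, 0),
}


def project(point: Point3, fx: float, fy: float, cx: float, cy: float) -> Tuple[int, int]:
    """Pinhole projection of a camera-frame point (z > 0) to integer pixel coords (u, v)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return round(fx * x / z + cx), round(fy * y / z + cy)


def draw_targets(image: List[List[RGB]], targets: Dict[str, Point3],
                 fx: float, fy: float, cx: float, cy: float,
                 radius: int = 2) -> List[List[RGB]]:
    """Return a copy of `image` with a filled disc at each projected joint target."""
    out = [row[:] for row in image]  # copy rows so the original observation is untouched
    height, width = len(out), len(out[0])
    for joint, point in targets.items():
        u, v = project(point, fx, fy, cx, cy)
        color = JOINT_COLORS[joint]
        for row in range(v - radius, v + radius + 1):
            for col in range(u - radius, u + radius + 1):
                inside = (row - v) ** 2 + (col - u) ** 2 <= radius ** 2
                if inside and 0 <= row < height and 0 <= col < width:
                    out[row][col] = color
    return out


blank = [[(0, 0, 0)] * 64 for _ in range(64)]
targets = {"gripper": (0.0, 0.0, 1.0), "wrist": (0.1, 0.0, 1.0)}
frame = draw_targets(blank, targets, fx=100, fy=100, cx=32, cy=32)
print(frame[32][32], frame[32][42])  # (255, 255, 0) (0, 0, 255)
```

Encoding the action as pixels in the same frame as the observation is what lets an image model produce it; a controller then has to read those markers back out as joint commands.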

Here’s a breakdown of how it works:

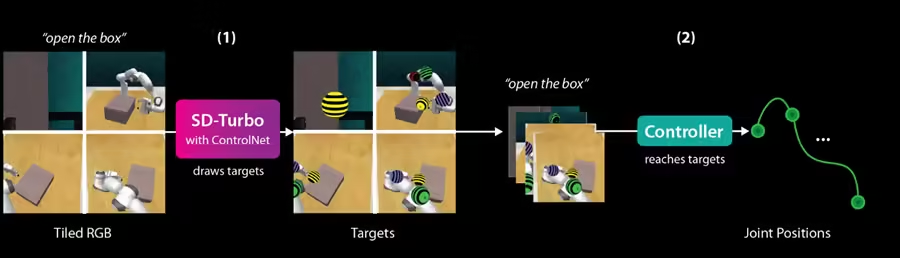

- Behavior Cloning: Genima uses behavior cloning to map visual observations (RGB images) and language goals to robotic joint actions.

- Two-Stage Process:

  - Diffusion Agent: The system fine-tunes Stable Diffusion Turbo with ControlNet to generate target images. These images mark the robot’s future joint positions with colored spheres, one each for the base, elbow, wrist, and gripper.

  - ACT-based Controller: The target images are then fed into a controller based on ACT (Action Chunking with Transformers), which translates them into a sequence of joint actions. The controller learns to focus on the targets while ignoring the scene’s background.

- Action Generation as Image Generation: Instead of relying on conventional 3D representations or keypoints, Genima formulates action generation as an image-generation task. Because it builds on Stable Diffusion, a diffusion model pretrained on internet-scale image data, it generalizes well to novel objects and environmental changes.

- Benchmarking: Genima has been tested on 25 simulated RLBench tasks and 9 real-world manipulation tasks, often outperforming state-of-the-art visuomotor approaches. It handles scene perturbations (such as changes in object color or lighting) and out-of-distribution tasks particularly well.

- Emergent Properties: Genima shows emergent behaviors like re-rendering inputs into canonical forms, making it more adaptable to variations in the environment.
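The two-stage design described in the list above can be summarized as a small control loop. The sketch below is hypothetical: `TwoStageAgent`, `stub_diffusion_agent`, `make_stub_controller`, and `run_episode` are illustrative names. The diffusion stage is a placeholder that returns the observation unchanged, and the controller is a stand-in that linearly interpolates toward a fixed joint target instead of reading spheres from the image with a learned ACT-style network. It only shows the data flow: an image and a language goal go in, a target image comes out, and the controller turns that into a chunk of several future joint positions.

```python
# Hypothetical sketch of a two-stage image-to-action loop.
# Both stages are stand-ins; neither is Genima's actual model.
from dataclasses import dataclass
from typing import Callable, List, Tuple

Image = List[List[Tuple[int, int, int]]]  # H x W grid of RGB pixels
Joints = List[float]                       # one position per joint


@dataclass
class TwoStageAgent:
    # Stage 1: observation + language goal -> target image with joint spheres drawn on it.
    diffusion_agent: Callable[[Image, str], Image]
    # Stage 2: target image + current joints -> chunk of future joint positions.
    controller: Callable[[Image, Joints], List[Joints]]

    def act(self, observation: Image, goal: str, joints: Joints) -> List[Joints]:
        target_image = self.diffusion_agent(observation, goal)
        return self.controller(target_image, joints)


def stub_diffusion_agent(observation: Image, goal: str) -> Image:
    # Placeholder: a fine-tuned image model would draw the target spheres here.
    return observation


def make_stub_controller(target: Joints, chunk_size: int) -> Callable[[Image, Joints], List[Joints]]:
    # Placeholder: ignores the image and linearly interpolates toward a fixed target.
    def controller(target_image: Image, joints: Joints) -> List[Joints]:
        return [
            [j + (t - j) * step / chunk_size for j, t in zip(joints, target)]
            for step in range(1, chunk_size + 1)
        ]
    return controller


def run_episode(agent: TwoStageAgent, observation: Image, goal: str,
                joints: Joints, num_chunks: int) -> Joints:
    # Query the agent once per chunk and execute every command in the chunk.
    for _ in range(num_chunks):
        for command in agent.act(observation, goal, joints):
            joints = command  # a real robot would send this command to its motors
    return joints


agent = TwoStageAgent(stub_diffusion_agent, make_stub_controller([1.0, 0.5], chunk_size=4))
image = [[(0, 0, 0)] * 8 for _ in range(8)]
chunk = agent.act(image, "open the drawer", [0.0, 0.0])
print(chunk[0], chunk[-1])  # [0.25, 0.125] [1.0, 0.5]
```

Executing a whole chunk before querying again keeps the comparatively slow image-generation step out of every control tick; whether Genima uses exactly this schedule is an assumption of this sketch.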

In short, Genima applies image-generation models to robotic control by lifting actions into image space, and its results on manipulation tasks suggest the approach has significant potential.

Applications in Robotics

Genima’s use of generative AI is not limited to one type of robot. The researchers believe the system could be used to train everything from robotic arms in factories to humanoid robots and autonomous vehicles.

Ivan Kapelyukh, a PhD student in robot learning, says the approach is more interpretable for users because it lets them predict a robot’s actions before they happen, which can reduce errors.

Testing and Success Rates

To evaluate Genima, the researchers ran the system on 25 simulated tasks and 9 real-world tasks using a robot arm, with success rates of 50% and 64%, respectively. These numbers leave room for improvement, but the researchers believe they can make the system faster and more accurate by extending it to video-generation models, which can predict sequences of future actions rather than single steps.

Future Potential

The team is optimistic about Genima’s potential for training robots to perform a variety of tasks, such as folding laundry or opening drawers. Its adaptability means it could be used in a range of settings, from domestic robots to industrial machines.

“This could be a general way to train all kinds of robots,” says Zoey Chen, a PhD student from the University of Washington.

Conclusion

As generative AI models like Stable Diffusion evolve, using them to train robots opens up possibilities for more autonomous and capable machines. With continued research, systems like Genima may help robots handle more complex tasks and could lead to advances in both home and industrial robotics.