Recent advancements in AI are showing that large language models (LLMs), which were originally designed for tasks like generating text, can do much more. Researchers are now exploring whether these models can also act as low-level controllers for video games.

This study, known as the Atari-GPT experiment, focuses on how well LLMs, like GPT-4V and Gemini 1.5 Flash, can perform in Atari video games compared to human players and reinforcement learning (RL) agents, which are typically better at such tasks.

From High-Level Thinking to Low-Level Control

Usually, large language models are used for tasks that require high-level planning. In these tasks, AI makes general decisions and outlines strategies. For example, in robotics, AI would plan how to achieve a goal rather than controlling each movement of the robot. However, in video games, low-level control is required.

This means the AI needs to make decisions for every action, like when to move, jump, or shoot. Traditional reinforcement learning (RL) agents excel in this area because they learn by trial and error, while LLMs are not specifically designed for this.

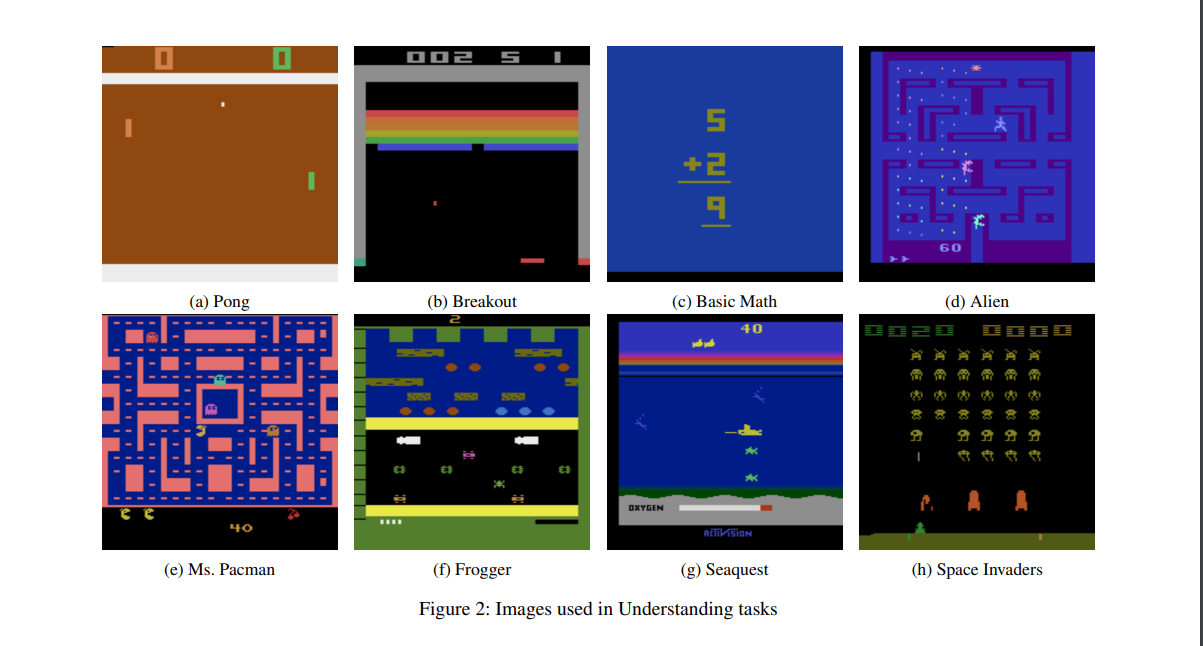

The researchers in the Atari-GPT study tested how well LLMs could handle the fast and complex environments of these Atari games. The goal was to see if LLMs could decide in real-time what action to take, such as moving left or firing at an enemy, based on what they see on the screen.

Combining Visual Inputs and Learning from Examples

One of the main features of this experiment was using multimodal inputs. This means that the AI models were given both text and images to process. In this case, they were provided with images from the game and then had to make decisions based on those images. This allowed the AI to “see” what’s happening in the game, similar to how a human would play.

The researchers also used a technique called In-Context Learning (ICL), where the AI was shown examples of human gameplay before being asked to play the game itself. The idea was that this would help the AI understand the game better. However, even with these examples, the AI models didn’t perform much better. The study showed that while the AI could process the visual data and understand the game elements, it struggled to make quick and accurate decisions during gameplay.

Performance Comparison: LLMs vs. Humans and RL Agents

The researchers compared the performance of the LLMs with human players and RL agents. Unsurprisingly, human players and RL agents performed much better than the LLMs. RL agents, which are specifically trained for these tasks, outshined LLMs in games like Space Invaders and Ms. Pacman, where quick reactions and good strategy are essential.

For example, in Space Invaders, RL agents could react quickly to enemy movements and optimize their actions based on what was happening on the screen. In contrast, LLMs often made slower or incorrect decisions, which led to lower scores. The same was seen in Ms. Pacman, where the LLMs failed to plan properly to avoid the ghosts or gather the dots, leading to frequent losses.

Even though the LLMs lagged behind, they showed some ability to understand the game layout. They were able to recognize objects in the game, like enemies and the player’s character, and understood the basic rules of the game. However, translating this understanding into real-time action was difficult for them.

What’s Next for LLMs in Gaming?

The Atari-GPT study provides valuable insights into the strengths and weaknesses of using large language models for tasks that require fast and accurate decision-making. The LLMs can understand visual information and even make strategic decisions, but they are not yet ready to replace human players or RL agents in video games.

One possible way to improve this is to combine the best of both worlds, using the high-level planning abilities of LLMs and the fast action-response skills of RL agents. This combination could create an AI system that can plan, strategize, and act quickly, making it more suitable for video games and other dynamic tasks.

Conclusion

The Atari-GPT experiment shows that while LLMs are highly capable in many areas, they are not yet proficient in fast-paced, real-time environments like video games. However, their ability to understand and process visual information is promising, and with further research, they may be able to improve in tasks requiring quick decisions and real-time control.

[…] Led by Jaime A. Berkovich and Markus J. Buehler, LifeGPT can predict the behavior of Conway’s Game of Life without knowing the grid size or […]