AI is constantly evolving, especially with large language models (LLMs) that are changing many fields. A recent research paper called “Automating Thought of Search: A Journey Towards Soundness and Completeness,” written by Daniel Cao, Michael Katz, Harsha Kokel, Kavitha Srinivas, and Shirin Sohrabi, introduces an exciting new system called AutoToS.

This system automates search and planning processes without needing human help. AutoToS marks an important step forward in AI planning and opens up new possibilities for automation.

Key Takeaways

- AutoToS automates the AI planning process, removing human involvement and achieving 100% accuracy.

- The system uses unit tests to provide feedback on the soundness and completeness of LLM-generated search components.

- AutoToS is efficient, requiring minimal feedback iterations and working well across different LLM models.

- Future developments could include automating unit test generation and fine-tuning smaller models for enhanced efficiency.

The Shift from Human Feedback to Automation

Methodology: Automating Feedback Through Unit Tests

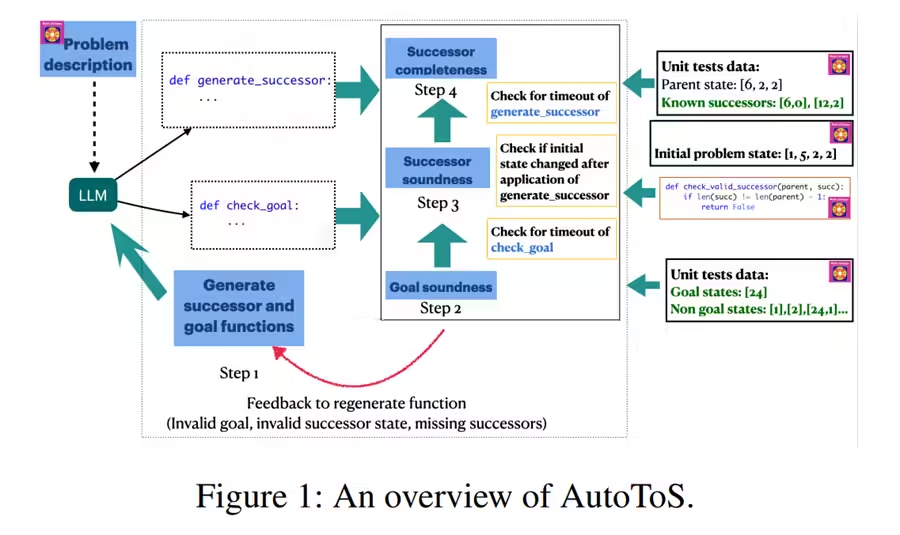

AutoToS removes human involvement by using unit tests and automated feedback to ensure that the LLM-generated search components are accurate. This system builds upon the ToS model by guiding the AI step-by-step in generating sound and complete successor functions and goal tests.

The process involves multiple iterations of feedback until the LLM produces accurate results.

Initial Prompt: The process begins with an initial prompt where the LLM is asked to generate a Python function for the successor and goal tests.

Goal Function Check: The system performs unit tests on the goal function to ensure it correctly identifies goal and non-goal states. If a failure is detected, the system provides feedback to the model, prompting it to revise the function until all unit tests pass.

Successor Function Soundness and Completeness Check: The successor function undergoes multiple tests, including checks for soundness and completeness. Soundness ensures that the generated successor states are valid, while completeness ensures that all possible successors are generated. Feedback is provided to the LLM if errors are detected, prompting revisions.

The use of both domain-specific and generic unit tests allows the system to provide precise feedback, leading to highly accurate code generation by the LLMs.

Results: 100% Accuracy Across Multiple Domains

AutoToS was tested on five representative search problems: BlocksWorld, PrOntoQA, Mini Crosswords, the 24 Game, and Sokoban. The results were impressive, with AutoToS achieving 100% accuracy across all evaluated domains, rivaling or even surpassing human-in-the-loop models like ToS.

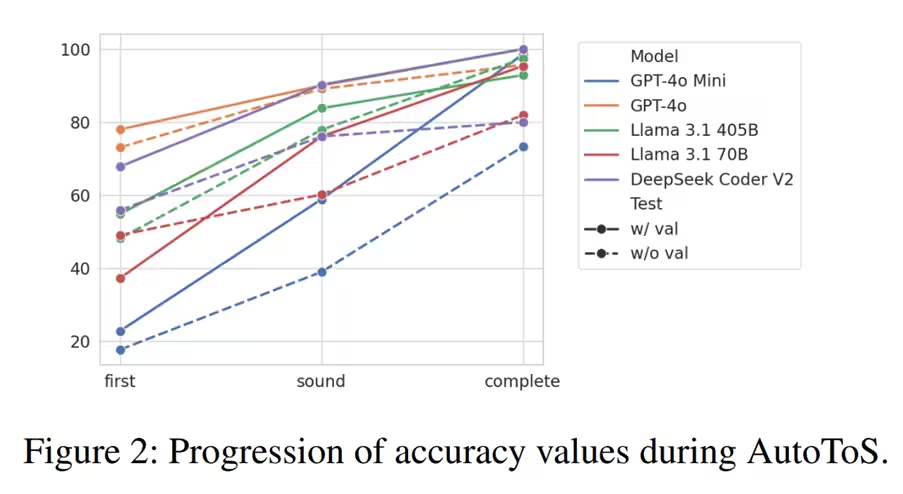

One of the critical findings is that the total number of calls to the LLMs in AutoToS is comparable to the human-involved ToS method, highlighting the efficiency of the automated system. The authors also conducted an ablation study to confirm the importance of soundness and completeness feedback in improving the accuracy of the generated code.

Moreover, AutoToS is shown to be adaptable, as it works across various LLMs, ranging from small models like GPT-4o-mini to larger models like Llama3.1-405b. The study found no significant performance gaps between these models, suggesting that AutoToS could be efficient even with smaller, less expensive models.

Key Findings and Future Directions

- Automated Search: AutoToS successfully automates the search process, eliminating the need for human experts to provide feedback, a major improvement over previous models.

- Efficiency: The system achieves high accuracy with a minimal number of feedback iterations, showing that fully automated search planning is both feasible and effective.

- Model Versatility: AutoToS performs consistently well across various LLMs, indicating that the system is versatile and can work with different model architectures.

The table below summarizes the core aspects of the research:

| Problem | Methodology | Result | Conclusion |

|---|---|---|---|

| AI planning with LLMs | Automated search component generation | 100% accuracy across domains | AutoToS effectively automates AI planning without human input |

| Successor function | Unit tests for soundness and completeness | Reduced feedback iterations | Automated feedback leads to efficient, accurate code generation |

| Goal function validation | Iterative feedback through unit tests | Correct goal state identification | Feedback loops ensure sound and complete search components |

Conclusion

The AutoToS system represents a significant advancement in AI planning by fully automating the process of generating sound and complete search components. This innovation not only improves efficiency but also removes the need for human experts to guide the search process. As AI models continue to evolve, systems like AutoToS will play a crucial role in making complex problem-solving more accessible and scalable.

Future research could explore extending AutoToS to generate the unit tests automatically or applying the system to more complex planning problems. Additionally, fine-tuning smaller LLMs to match or exceed the performance of larger models could make the system even more efficient.

Final Thoughts

The research presented in this paper showcases how AI planning can evolve through automation, providing a glimpse into the future of AI-driven problem-solving. AutoToS is a step forward in reducing the dependency on human expertise and increasing the reliability and efficiency of search algorithms, setting the stage for more advanced AI applications in various fields.

[…] research shows that artificial intelligence (AI) bots can now solve image-based CAPTCHAs with a perfect 100% success rate. CAPTCHAs are used on […]